🤔 Why Is Redis Much More Than a Cache?

The versatility of Redis lies in its support for multiple data structures:

- Strings: simple values, ideal for counters and basic caching.

- Hashes: object-like records (one key holding a map of fields to values).

- Lists: queues, stacks and simple streams.

- Sets: unordered collections of unique members, useful for relationships and membership checks.

- Sorted Sets (ZSET): rankings and score-based ordered lists.

- Bitmaps and HyperLogLog: compact analytics and approximate counting.

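Because Redis keeps its whole dataset in RAM, most commands complete in microseconds on the server and a network round trip is typically sub-millisecond, enabling real-time applications even under heavy load. Each command also executes atomically (Redis runs commands one at a time), which simplifies concurrency logic without manually locking resources.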

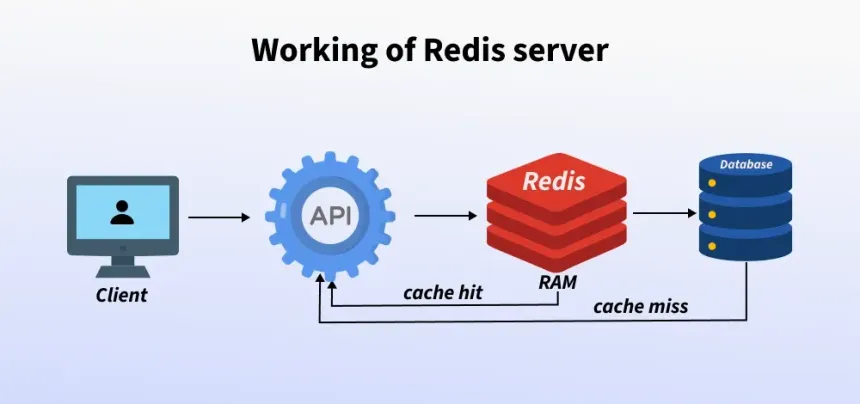

Redis does not try to replace your primary database; it complements it. It sits between your application and your “slow” data sources, acting as a high-performance layer for caching, coordination, and ephemeral state.

💡 10 Advanced Use Cases with Technical Implementation

1. Database Query Caching

Technical context: Heavy relational queries, especially those with multiple JOINs, can become bottlenecks and degrade application throughput.

Redis solution: Implement a query cache that stores the serialized result (e.g., JSON) under a key derived from the query and its parameters, or from a hash of them.

```javascript
// Example using node-redis (v4+)
import { createClient } from 'redis';

const client = createClient();
client.on('error', (err) => console.error('Redis Client Error:', err));
await client.connect();

async function getProductsByCategory(categoryId) {
  const cacheKey = `query:products:category:${categoryId}`;

  // Attempt to retrieve from cache (GET returns null on a miss)
  const cachedResult = await client.get(cacheKey);
  if (cachedResult !== null) {
    console.log('Cache Hit - Returning from Redis');
    return JSON.parse(cachedResult);
  }

  // Cache Miss - query the primary DB (`db` stands for your existing database client)
  console.log('Cache Miss - Querying DB');
  const dbResult = await db.query(
    'SELECT * FROM products WHERE category_id = ?',
    [categoryId]
  );

  // Store in cache with a TTL
  await client.set(cacheKey, JSON.stringify(dbResult), {
    EX: 3600 // 1 hour expiration
  });

  return dbResult;
}
```

Benefits: Drastically reduces latency, offloads the primary DB, and absorbs traffic spikes without melting the relational engine.

Best practices:

- Invalidate cache when relevant data changes (e.g., via events or queues).

- Avoid long TTL in highly dynamic data.

- Use consistent, versioned keys (e.g., v1:query:products:category:123).
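
To combine the first and last practices, the cache-aside read can be wrapped in a small helper that builds versioned keys and deletes the affected key from the write path. The sketch below assumes a node-redis v4-style client (`get`, `set` with `{ EX }`, `del`); `CACHE_VERSION`, `productsKey`, `cachedQuery`, and `onProductChanged` are hypothetical names chosen for illustration.

```javascript
// Hypothetical version tag: bump it (e.g., to 'v2') to orphan every old entry at once.
const CACHE_VERSION = 'v1';

// Consistent, versioned key for the "products by category" query.
function productsKey(categoryId) {
  return `${CACHE_VERSION}:query:products:category:${categoryId}`;
}

// Generic cache-aside read: return the cached JSON, or run loader() and cache its result.
async function cachedQuery(client, key, ttlSeconds, loader) {
  const cached = await client.get(key);
  if (cached !== null) return JSON.parse(cached);

  const fresh = await loader();
  await client.set(key, JSON.stringify(fresh), { EX: ttlSeconds });
  return fresh;
}

// Call from the write path (or an event/queue consumer) after a product
// in this category is created, updated, or deleted.
async function onProductChanged(client, categoryId) {
  await client.del(productsKey(categoryId));
}
```

Bumping `CACHE_VERSION` invalidates every entry at once without scanning keys; the old entries simply age out through their TTL.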

2. User Session Store

Technical context: In architectures with multiple application instances (behind a load balancer), session state cannot live in local memory.

Redis solution: Use Redis as a centralized, high-speed session store with built-in TTL support.

```javascript
// express-session integration (assumes an Express `app` and the connected `client` from above)
import session from 'express-session';
import RedisStore from 'connect-redis'; // connect-redis v7; v8+ exports it as `{ RedisStore }`

const redisStore = new RedisStore({
  client: client,
});

app.use(session({
  store: redisStore,
  secret: process.env.SESSION_SECRET,
  resave: false,
  saveUninitialized: false,
  cookie: {
    secure: 'auto',
    maxAge: 1000 * 60 * 60 * 24 // 1 day
  }
}));

// Alternative: store per-user session metadata yourself in a Hash
async function updateUserSession(userId, sessionData) {
  const sessionKey = `user:session:${userId}`;

  await client.hSet(sessionKey, {
    lastLogin: new Date().toISOString(),
    userAgent: sessionData.userAgent,
    ip: sessionData.ip
  });

  await client.expire(sessionKey, 86400); // 24h TTL
}
```

Benefits: Consistent sessions in distributed architectures, high availability, and the ability to mass-invalidate sessions (e.g., after a policy change).

3. Real-Time Pub/Sub Messaging System

Technical context: Apps that require instant notifications, chat, or real-time updates (dashboards, feeds, etc.).

Redis solution: Use the native Publisher/Subscriber (Pub/Sub) pattern.

```javascript
// Subscriber: a subscribing connection is dedicated to Pub/Sub,
// so it gets its own duplicate connection
const subscriber = client.duplicate();
await subscriber.connect();

await subscriber.subscribe('notifications:user:123', (message) => {
  console.log(`Notification received: ${message}`);
});

// Publisher
const publisher = client.duplicate();
await publisher.connect();

await publisher.publish(
  'notifications:user:123',
  'You have a new message!'
);
```

Benefits: Low-latency, scalable real-time communication between services.

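Note: Native Pub/Sub is fire-and-forget: messages are not stored, so a subscriber that is disconnected when a message is published never receives it. For durability or retries, combine Redis with queues or use Redis Streams.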
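
As a sketch of the Streams option, the functions below append notifications with XADD and read them through a consumer group, acknowledging each entry only after it is handled; unacknowledged entries remain in the group's pending list so they can be retried. They assume node-redis v4 method names (`xAdd`, `xGroupCreate`, `xReadGroup`, `xAck`), and the key, group, and function names are hypothetical. Because `BLOCK` makes the read wait, the consumer should run on its own connection (e.g., one created with `client.duplicate()`).

```javascript
// Producer: append a durable entry to the stream (field values are strings).
async function publishNotification(client, userId, text) {
  return client.xAdd(`stream:notifications:${userId}`, '*', { text });
}

// Create the consumer group once; ignore the error if it already exists.
async function ensureGroup(client, streamKey, group) {
  try {
    await client.xGroupCreate(streamKey, group, '0', { MKSTREAM: true });
  } catch (err) {
    if (!String(err.message).includes('BUSYGROUP')) throw err;
  }
}

// Consumer: read new entries, handle them, then acknowledge each one.
// Entries that are never acknowledged stay pending and can be retried.
async function consumeOnce(client, streamKey, group, consumer, handle) {
  const response = await client.xReadGroup(
    group,
    consumer,
    { key: streamKey, id: '>' }, // '>' = entries not yet delivered to this group
    { COUNT: 10, BLOCK: 5000 }   // wait up to 5 s for new entries
  );
  if (!response) return 0; // timed out with nothing new

  let handled = 0;
  for (const stream of response) {
    for (const { id, message } of stream.messages) {
      await handle(message);
      await client.xAck(streamKey, group, id);
      handled += 1;
    }
  }
  return handled;
}
```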

4. Rate Limiting and API Protection

Technical context: APIs must be protected from abuse and brute-force attacks, and usage must stay fair across clients.

Redis solution: Implement a rate limiter using atomic commands like INCR and EXPIRE.

```javascript
class RateLimiter {
  constructor(redisClient) {
    this.client = redisClient;
  }

  async isAllowed(userId, endpoint, maxRequests, windowSeconds) {
    const key = `ratelimit:${userId}:${endpoint}`;

    // MULTI/EXEC runs INCR + EXPIRE as one atomic transaction
    const multi = this.client.multi();
    multi.incr(key);
    multi.expire(key, windowSeconds, 'NX'); // set a TTL only if the key has none (Redis 7.0+)

    const results = await multi.exec();
    const requestCount = results[0]; // reply to INCR

    return requestCount <= maxRequests;
  }
}

// Usage
const limiter = new RateLimiter(client);
const allowed = await limiter.isAllowed('user123', '/api/login', 5, 300); // 5 attempts per 5 min

if (!allowed) {
  throw new Error('Rate limit exceeded');
}
```

Benefits: Effective defense against attacks, controlled resource usage, and distributed rate enforcement.

Advanced variants: sliding windows, temporary IP blocks, combined limits by user+endpoint+IP.
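
A sliding-window variant can be sketched with a Sorted Set that stores one member per request, scored by its timestamp in milliseconds: old members are trimmed, the new request is added, and the remaining count is compared with the limit, all inside one MULTI. This assumes node-redis v4 method names; `isAllowedSliding` is a hypothetical name.

```javascript
// Sliding-window log limiter: one sorted-set member per request, scored by timestamp (ms).
async function isAllowedSliding(client, key, maxRequests, windowSeconds, now = Date.now()) {
  const windowStart = now - windowSeconds * 1000;
  // Unique member so two requests in the same millisecond are both counted.
  const member = `${now}-${Math.random().toString(36).slice(2)}`;

  const results = await client
    .multi()
    .zRemRangeByScore(key, 0, windowStart)    // drop requests older than the window
    .zAdd(key, { score: now, value: member }) // record this request
    .zCard(key)                               // count requests inside the window
    .expire(key, windowSeconds)               // let idle keys disappear
    .exec();

  const requestCount = results[2]; // reply to ZCARD
  return requestCount <= maxRequests;
}
```

In this simple version, rejected requests are also recorded, so a client that keeps retrying stays blocked until it slows down; counting only accepted requests would need a Lua script to make the check-and-add atomic.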

5. Shopping Cart Storage

Technical context: In e-commerce, the shopping cart is temporary but critical: it requires fast access, consistency and auto-expiration.

Redis solution: Model the cart as a Redis Hash where each field is productId → quantity.

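```javascript
// Sets (overwrites) the quantity of one product and refreshes the cart's TTL
async function addToCart(userId, productId, quantity) {
  const cartKey = `cart:${userId}`;
  await client.hSet(cartKey, productId, quantity);
  await client.expire(cartKey, 604800); // 1 week
}

// Returns { productId: quantity }, with quantities as strings
async function getCart(userId) {
  const cartKey = `cart:${userId}`;
  return await client.hGetAll(cartKey);
}
```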

Benefits: Ultra-fast performance, automatic expiration of temporary state, and reduced load on transactional DBs.
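
Because `addToCart` overwrites the stored quantity, a real cart usually also needs relative updates. A possible sketch, assuming node-redis v4 method names (`hIncrBy`, `hDel`, `hGetAll`) and hypothetical helper names, uses HINCRBY so concurrent "add one more" clicks never overwrite each other:

```javascript
// Change one product's quantity by `delta` (negative to remove items).
async function changeQuantity(client, userId, productId, delta) {
  const cartKey = `cart:${userId}`;
  const newQty = await client.hIncrBy(cartKey, productId, delta);
  if (newQty <= 0) {
    await client.hDel(cartKey, productId); // drop lines that reach zero
  }
  await client.expire(cartKey, 604800); // refresh the 1-week TTL on activity
  return Math.max(newQty, 0);
}

// hGetAll returns every value as a string; convert quantities to numbers.
async function getCartQuantities(client, userId) {
  const raw = await client.hGetAll(`cart:${userId}`);
  return Object.fromEntries(
    Object.entries(raw).map(([productId, qty]) => [productId, Number(qty)])
  );
}
```

The zero check and the HDEL are two separate commands, so an increment that lands between them could be discarded along with the field; a Lua script would close that small gap if it matters.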

6. Atomic Counters and Real-Time Statistics

Technical context: Systems requiring concurrent increments (views, likes, shares) without race conditions.

Redis solution: Use INCR, INCRBY and HINCRBY, which are atomic by design.

```javascript
async function trackVideoView(videoId) {
  const key = `video:stats:${videoId}`;

  // Each HINCRBY is atomic; MULTI applies both increments together
  const multi = client.multi();
  multi.hIncrBy(key, 'totalViews', 1);
  multi.hIncrBy(key, 'viewsToday', 1); // nothing here resets this field at midnight
  await multi.exec();
}

async function getVideoStats(videoId) {
  const key = `video:stats:${videoId}`;
  return await client.hGetAll(key);
}
```

Benefits: Data consistency in high-concurrency environments and extreme speed for real-time analytics.
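
Note that a `viewsToday` field keeps growing unless something resets it. One common alternative, sketched here under the assumption of node-redis v4 method names and a hypothetical `video:views:<id>:<date>` key layout, keeps one counter per UTC day and lets old days expire:

```javascript
// Per-day key such as video:views:42:2024-05-01 (UTC date).
function dailyViewsKey(videoId, date = new Date()) {
  const day = date.toISOString().slice(0, 10); // YYYY-MM-DD in UTC
  return `video:views:${videoId}:${day}`;
}

async function trackVideoViewDaily(client, videoId, date = new Date()) {
  const totalKey = `video:stats:${videoId}`;
  const dayKey = dailyViewsKey(videoId, date);

  const results = await client
    .multi()
    .hIncrBy(totalKey, 'totalViews', 1) // all-time counter
    .incr(dayKey)                       // today's counter starts at 1 on a new day
    .expire(dayKey, 60 * 60 * 24 * 8)   // keep roughly a week of daily history
    .exec();

  return { totalViews: results[0], viewsToday: results[1] };
}
```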

7. Leaderboards with Sorted Sets

Technical context: Games or platforms that require real-time scoring, rankings, reputation or achievements.

Redis solution: Sorted Sets automatically maintain score-ordered members.

```javascript
async function updatePlayerScore(playerId, score) {
  await client.zAdd('game:leaderboard', {
    value: playerId,
    score: score
  });
}

async function getTopPlayers(limit = 10) {
  return await client.zRangeWithScores(
    'game:leaderboard',
    0,
    limit - 1,
    { REV: true } // Descending (highest score first)
  );
}
```

Benefits: Score updates in O(log N), top-N queries in O(log N + M) for M returned members, and always-sorted data without post-processing.
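
Two other frequent leaderboard operations are adding points to an existing score and looking up a single player's position. A minimal sketch, assuming node-redis v4's `zIncrBy` and `zRevRank` (the helper names are hypothetical):

```javascript
// Add points to a player's score (the member is created if missing); returns the new score.
async function addPoints(client, playerId, points) {
  return client.zIncrBy('game:leaderboard', points, playerId);
}

// 1-based position, highest score first, or null if the player has no score yet.
async function getPlayerRank(client, playerId) {
  const rank = await client.zRevRank('game:leaderboard', playerId); // 0-based or null
  return rank === null ? null : rank + 1;
}
```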

8. Asynchronous Task Queues

Technical context: Offload heavy processes (emails, image processing, notifications) so they don't block the response to the user.

Redis solution: Implement a simple queue using Lists with LPUSH/BRPOP.

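```javascript
// Producer
async function addEmailTask(emailData) {
  await client.lPush('queue:emails', JSON.stringify(emailData));
}

// Consumer worker: BRPOP blocks its connection, so give the worker its own
// (e.g., const workerClient = client.duplicate(); await workerClient.connect();)
async function processEmailQueue(workerClient) {
  while (true) {
    // Timeout 0 = wait indefinitely; resolves to { key, element }
    const task = await workerClient.brPop('queue:emails', 0);
    const emailData = JSON.parse(task.element);
    await sendEmail(emailData); // sendEmail: your own email-sending function
  }
}
```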

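Benefits: Service decoupling and dramatically improved responsiveness. Note that BRPOP removes a task before it is processed, so a worker crash loses it; retries require an extra step such as a processing list.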
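
A common way to get those retries is the "reliable queue" pattern: BLMOVE (Redis 6.2+) atomically moves each task from the queue into a processing list, and the worker removes it only after it succeeds. The sketch below assumes node-redis v4 method names (`blMove`, `lMove`, `lRem`); `queue:emails:processing`, `processOneEmailTask`, and `requeueStuckTasks` are hypothetical.

```javascript
const QUEUE = 'queue:emails';
const PROCESSING = 'queue:emails:processing'; // hypothetical in-flight list

// Handle at most one task. Producers LPUSH, so the oldest task sits at the RIGHT end.
// Returns false if the queue stayed empty until the timeout.
async function processOneEmailTask(workerClient, handle, timeoutSeconds = 5) {
  // Atomically move the oldest task into PROCESSING
  const raw = await workerClient.blMove(QUEUE, PROCESSING, 'RIGHT', 'LEFT', timeoutSeconds);
  if (raw === null) return false;

  await handle(JSON.parse(raw));               // if this throws, the task stays in PROCESSING
  await workerClient.lRem(PROCESSING, 1, raw); // success: remove the finished task
  return true;
}

// Recovery: push tasks left in PROCESSING (e.g., after a crash) back onto the queue,
// ordered so that the oldest one is consumed first.
async function requeueStuckTasks(client) {
  let moved = 0;
  while ((await client.lMove(PROCESSING, QUEUE, 'LEFT', 'RIGHT')) !== null) {
    moved += 1;
  }
  return moved;
}
```

A worker would call `processOneEmailTask(workerClient, sendEmail)` in a loop. `requeueStuckTasks` is only safe when no other worker is mid-task (for example, a single worker at startup); with several workers you would need per-task deadlines instead.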

9. Caching Results of Expensive Computations

Technical context: Heavy computations (data processing, ML models, complex aggregations) are expensive, and their results are often requested again with the same inputs.

Redis solution: Cache the result using a hash derived from input parameters.

```javascript
import crypto from 'crypto';

async function getExpensiveCalculation(inputParams) {
  // Derive a fixed-length cache key from the input parameters
  const inputHash = crypto.createHash('sha256')
    .update(JSON.stringify(inputParams))
    .digest('hex');
  const cacheKey = `calc:${inputHash}`;

  const cachedResult = await client.get(cacheKey);
  if (cachedResult !== null) return JSON.parse(cachedResult);

  // intensiveCalculation: your own expensive function
  const result = await intensiveCalculation(inputParams);
  await client.set(cacheKey, JSON.stringify(result), { EX: 3600 });

  return result;
}
```

Benefits: Significant CPU savings, reduced costs, improved UX.
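
One subtlety in hashing `JSON.stringify(inputParams)`: property order is preserved, so `{ a: 1, b: 2 }` and `{ b: 2, a: 1 }` hash differently and miss each other's cache entries. A small canonical serializer, sketched here for plain JSON-compatible input (the name `stableStringify` is hypothetical), sorts keys before the hash is computed:

```javascript
// Serialize JSON-compatible data with object keys sorted at every level.
function stableStringify(value) {
  // Honour toJSON() (e.g., Date) the same way JSON.stringify does
  if (value !== null && typeof value === 'object' && typeof value.toJSON === 'function') {
    value = value.toJSON();
  }
  if (Array.isArray(value)) {
    return `[${value.map((item) => stableStringify(item)).join(',')}]`;
  }
  if (value !== null && typeof value === 'object') {
    const entries = Object.keys(value)
      .filter((key) => value[key] !== undefined) // JSON.stringify drops these too
      .sort()
      .map((key) => `${JSON.stringify(key)}:${stableStringify(value[key])}`);
    return `{${entries.join(',')}}`;
  }
  // Primitives; undefined becomes null, as it does inside JSON arrays
  return JSON.stringify(value) ?? 'null';
}
```

It can replace `JSON.stringify(inputParams)` inside `getExpensiveCalculation` without changing anything else.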

10. External API Response Caching

Technical context: Third-party APIs often have high latency and strict rate limits.

Redis solution: Cache responses, respecting refresh times and cache headers.

```javascript
async function fetchWithCache(apiUrl) {
  const cacheKey = `api:${apiUrl}`;

  const cached = await client.get(cacheKey);
  if (cached !== null) return JSON.parse(cached);

  const response = await fetch(apiUrl);
  if (!response.ok) {
    // Don't cache error responses
    throw new Error(`Request failed with status ${response.status}`);
  }
  const data = await response.json();

  // Cache for a fixed period
  await client.set(cacheKey, JSON.stringify(data), { EX: 600 }); // 10 min

  return data;
}
```

Benefits: Lower external latency, protection against rate limits, and resilience to provider downtime for as long as cached entries remain valid.
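
To respect the provider's cache headers rather than a fixed 10 minutes, the TTL can be derived from `Cache-Control`. This is a simplified sketch (hypothetical `ttlFromCacheControl`, not a full HTTP caching implementation) that treats `no-store`, `no-cache`, and `private` as uncacheable for a shared server-side cache and prefers `s-maxage` over `max-age`:

```javascript
// Derive a TTL in seconds from a Cache-Control header value.
// Returns 0 when the response should not be cached, or the fallback if no max-age is given.
function ttlFromCacheControl(headerValue, fallbackSeconds = 600) {
  if (!headerValue) return fallbackSeconds;
  const directives = headerValue.toLowerCase().split(',').map((d) => d.trim());

  // no-cache requires revalidation and private is per-user: skip both in this simple sketch
  if (['no-store', 'no-cache', 'private'].some((d) => directives.includes(d))) {
    return 0;
  }

  // s-maxage targets shared caches (like this one) and wins over max-age
  for (const name of ['s-maxage', 'max-age']) {
    const directive = directives.find((d) => d.startsWith(`${name}=`));
    if (directive) {
      const seconds = Number.parseInt(directive.slice(name.length + 1), 10);
      if (Number.isFinite(seconds) && seconds >= 0) return seconds;
    }
  }
  return fallbackSeconds;
}
```

In `fetchWithCache`, the header would come from `response.headers.get('cache-control')`, and the `set` call should be skipped when the TTL is 0, since Redis rejects `EX 0` as an invalid expire time.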

🏗️ High-Traffic Company Use Cases

- Twitter: Uses Redis to manage user timelines. When a user posts, the tweet is pushed into the cached timelines of their followers, and Redis lists keep those updates fast even at massive fan-out.

- Pinterest: Stores the social graph (who follows whom) and user boards, requiring fast access to highly queried relationships.

- Trello: Uses Redis for all ephemeral information shared quickly across servers (e.g., temporary board and card states in real time).

- Flickr: Uses it as the backbone for its async task queues for image processing and push notifications.

🔧 Production Configuration and Best Practices

Node.js + TypeScript Connection

```typescript
import { createClient } from 'redis';

// The client type is inferred from createClient()
const client = createClient({
  // Separate fields avoid URL-encoding problems with special characters in passwords
  username: process.env.REDIS_USER,
  password: process.env.REDIS_PASSWORD,
  socket: {
    host: process.env.REDIS_HOST,
    port: Number(process.env.REDIS_PORT ?? 6379),
    connectTimeout: 60000 // ms
  }
});

client.on('error', (err) => console.error('Redis Client Error:', err));
client.on('connect', () => console.log('Connected to Redis'));

// node-redis only opens the connection when connect() is called
await client.connect();
```

In production, it is recommended to connect Redis through private networks, use strong authentication, enable TLS when available, and monitor latency, memory usage, and key count.
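
Those recommendations map onto a few client options. The sketch below builds node-redis v4 options with TLS toggled by a hypothetical `REDIS_TLS` flag and a capped exponential `reconnectStrategy`; the variable names and delay values are assumptions to adapt to your environment.

```javascript
// Build node-redis client options for a production endpoint.
function buildRedisOptions(env) {
  return {
    username: env.REDIS_USER,
    password: env.REDIS_PASSWORD,
    socket: {
      host: env.REDIS_HOST,
      port: Number(env.REDIS_PORT ?? 6379),
      tls: env.REDIS_TLS === 'true', // hypothetical flag; enable when the server offers TLS
      connectTimeout: 10000, // ms
      // Receives the retry count; returns the delay in ms before the next attempt
      reconnectStrategy: (retries) => Math.min(2 ** retries * 50, 5000),
    },
  };
}
```

The result is passed straight to `createClient(buildRedisOptions(process.env))`.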

Persistence and Backup Strategies

In production environments, understanding and configuring Redis persistence strategies is critical to minimize data loss:

- RDB (Redis Database): Creates snapshots of the dataset. Ideal for backups and fast recovery. Uses fewer resources but may lose data between snapshots if a crash occurs.

- AOF (Append Only File): Logs every write operation. Provides stronger durability (less potential loss), but uses more storage and can be slightly slower.

The hybrid approach (RDB + AOF) is typically the most robust, balancing performance and durability.

In more advanced topologies, you can combine:

- Replication: read-only replicas for read scaling and high availability.

- Redis Cluster: automatic sharding for horizontal scaling.

- Sentinel: monitoring and automatic master failover.

✅ Conclusion: Redis as a High-Performance Platform

Redis is far more than a simple cache: it's an in-memory data structure platform that allows you to build advanced caching layers, messaging systems, rate limiting, job queues, real-time analytics, and much more.

If you are building a modern application with Node.js (or any other platform), integrating Redis can be the difference between an app that “works” and one that scales with confidence.

The next practical step is to choose one or two of the use cases above, integrate them into a test environment and measure: latency, DB load, CPU usage and user experience. From there, you'll see for yourself why Redis is an essential tool in every developer’s stack.